Back to business. I did some simple tests exporting (animated) scenes and objects from 3ds Max to Ogre, which worked fine. Since I do not have any 3ds Max skills, I left it at that for now. Learning the basics of 3ds Max has long been high on my list of 'things to do or learn before I die'. However, I've always put it off because of the intimidating graphical user interface of 3ds Max (I'll spare you another rant here ;-). So over the next few weeks/months I will spend a few hours every week trying to get a grasp of it.

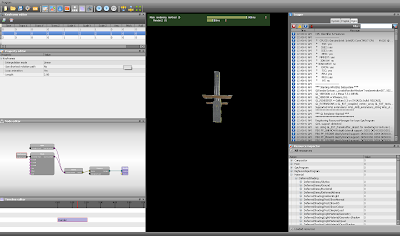

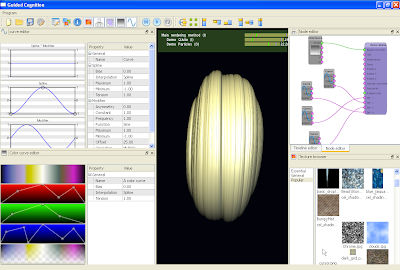

Last week I spent two evenings implementing a script editor. The script editor allows you to edit, well, scripts! It supports syntax highlighting (currently only for shaders in Cg, HLSL and GLSL syntax), but it can easily be extended to support all types of Ogre scripts (e.g. materials). Furthermore, the script editor allows you to recompile scripts on the fly while a 3D scene is being displayed. I finalized the script editor during @party, and to test it I ported some Shader Toy [iquilezles.org] shaders. There are still some issues to work out, and they point to a bigger 'untackled problem': even though the tool can deal with script compilation errors (it simply does not display any shaded geometry on screen), Ogre can still throw an exception when loading a successfully compiled shader. Exception handling is something I have not fully implemented yet, but it is high up on my 'todo' list.
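One common way to keep an editor alive when a user-initiated action (such as reloading a shader) throws is to funnel every such action through a single guard that converts exceptions into an error message for the UI. The following is a minimal sketch of that idea, not the tool's actual code: `runUserAction` and `ActionResult` are hypothetical names, and it assumes the engine's errors surface as subclasses of `std::exception` (to my knowledge Ogre's own `Ogre::Exception` derives from it).

```cpp
#include <exception>
#include <functional>
#include <stdexcept>
#include <string>

// Hypothetical result of a user-initiated action, suitable for a status bar.
struct ActionResult {
    bool ok;
    std::string message;
};

// Hypothetical guard: runs the action and turns any exception into an
// error message instead of letting it propagate and crash the editor.
ActionResult runUserAction(const std::string& name,
                           const std::function<void()>& action) {
    try {
        action();
        return {true, name + ": ok"};
    } catch (const std::exception& e) {
        // Covers std::runtime_error and any engine exception deriving
        // from std::exception.
        return {false, name + ": " + e.what()};
    } catch (...) {
        // Anything else (non-standard exception types).
        return {false, name + ": unknown error"};
    }
}
```

A recompile button would then call something like `runUserAction("recompile shader", [&] { /* reload the shader here */ })` and show `result.message` when `result.ok` is false, so the 3D view keeps running after a failed load.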

Without further ado, here is my updated todo list:

- Testing, testing and more testing!

- Learn some basic 3ds Max or Maya modeling skills, so I can test scene export/import features

- Come up with an exception handling mechanism to catch exceptions that originate from user-initiated actions, such as script compiler errors, setting invalid parameter values through the graphical user interface, and so on.

- Improve the colorcurve editor: replace the current graphical user interface, which requires you to animate individual red, green and blue curves to create a color gradient, with an interface where you can place colors at specific time points.
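The "colors at specific time points" idea in the last item is usually modeled as a list of color stops, with the gradient evaluated by interpolating between the two stops that surround a given time. Below is a minimal sketch assuming plain linear RGB interpolation and clamping outside the first and last stop; `ColorStop` and `ColorGradient` are hypothetical names, not part of the tool or of Ogre.

```cpp
#include <algorithm>
#include <vector>

struct Color {
    float r, g, b;
};

// A color placed at a specific time point.
struct ColorStop {
    float time;
    Color color;
};

// Hypothetical gradient: stops are kept sorted by time.
class ColorGradient {
public:
    void addStop(float time, Color color) {
        // Insert after any existing stops with the same time to keep order stable.
        auto it = std::upper_bound(stops_.begin(), stops_.end(), time,
            [](float t, const ColorStop& s) { return t < s.time; });
        stops_.insert(it, ColorStop{time, color});
    }

    // Linear interpolation between the surrounding stops; clamps at the ends.
    Color evaluate(float t) const {
        if (stops_.empty()) return {0.f, 0.f, 0.f};
        if (t <= stops_.front().time) return stops_.front().color;
        if (t >= stops_.back().time) return stops_.back().color;
        // First stop strictly after t; its predecessor is at or before t.
        auto hi = std::upper_bound(stops_.begin(), stops_.end(), t,
            [](float v, const ColorStop& s) { return v < s.time; });
        auto lo = hi - 1;
        float f = (t - lo->time) / (hi->time - lo->time);
        return {lo->color.r + f * (hi->color.r - lo->color.r),
                lo->color.g + f * (hi->color.g - lo->color.g),
                lo->color.b + f * (hi->color.b - lo->color.b)};
    }

private:
    std::vector<ColorStop> stops_;
};
```

With a red stop at time 0 and a blue stop at time 1, evaluating at 0.5 gives an even red/blue mix. Compared with three independent curves, the user only edits one list of stops, and the per-channel curves are derived from it.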

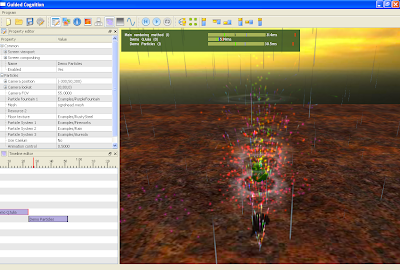

Note: I am giving myself a break from working on the 'nuts and bolts' of the tool and have started implementing a GPU-based particle renderer :-) Between that digression, learning basic 3ds Max skills, working on and around the house, and the friends and family coming over to visit us during the next few weeks, I do not think I will have much time to work on anything else. Oh well :-)

... to be continued ...